CRAB asynchronous Stage-out using Rucio¶

CRAB leverages Rucio to transfer files from remote sites to the destination site specified by the user.

Main differences with respect to previous FTS-based ASO¶

- Files will be in /store/user/rucio/<yourusername> instead of /store/user/<yourusername>. Same with <groupname>.

- Files will be owned by Rucio, not by you, and can be managed with Rucio commands.

- You will not be able to use rm/cp/mv unix commands, while of course you can read and list those files just like any other CMS data file.

Advantages¶

- Rucio keeps retrying the transfers and makes sure the files reach the destination, so no manual retries are needed.

As a user you can:

- Use Rucio to move or replicate files to another site where you have a quota.

- Manage files as a whole dataset, one dataset for each output file name.

- Reuse the output Container produced by CRAB as a CRAB input dataset, without going through DBS.

Moreover

- (Long advantage list for CRAB and Data Management operations.)

Current Limitation¶

It would not be fair to skip the limitations, even if some will be overcome in future developments. Here they are:

- Jobs that failed in the transferring stage cannot be resubmitted. You need to do a "task recovery" (submitting a new task, but only for the failed jobs).

- You cannot use T3_CH_CERNBOX for Rucio stageout because CERNBOX is not a CMS resource and cannot be managed by CMS Rucio.

- Transferring log files from the worker node (General.transferLogs=True) is still not available at the moment.

- As with all new features, some bugs have not been discovered yet, but it is in active development and they will be fixed as we go.

- We might need to deprecate FTS ASO (default) and migrate all CRAB users to Rucio ASO in the future anyway.

How it works¶

In Rucio, files are grouped in "datasets" (which CMS maps to DBS blocks). Datasets can then be grouped in "containers" (which CMS maps to DBS datasets). Containers can be nested at will and a dataset can belong to multiple containers. These features are new in Rucio with respect to DBS.
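The hierarchy described above can be pictured with a small data model. This is only an illustrative sketch: the class names `Dataset` and `Container` and the method `all_files` are made up for this example and are not part of the Rucio API.

```python
from dataclasses import dataclass, field
from typing import List, Set, Union


@dataclass
class Dataset:
    """A Rucio dataset: a flat group of files (a DBS block in CMS terms)."""
    name: str
    files: List[str] = field(default_factory=list)


@dataclass
class Container:
    """A Rucio container: groups datasets and, optionally, other containers."""
    name: str
    children: List[Union["Container", Dataset]] = field(default_factory=list)

    def all_files(self) -> Set[str]:
        """Collect every file reachable through nested containers and datasets."""
        found: Set[str] = set()
        for child in self.children:
            if isinstance(child, Dataset):
                found.update(child.files)
            else:
                found.update(child.all_files())
        return found


# The same dataset attached to two containers, one of which is nested.
block = Dataset("blockA", ["f1.root", "f2.root"])
inner = Container("inner", [block])
other = Container("other", [block])
outer = Container("outer", [inner])
print(sorted(outer.all_files()))
```

The point of the sketch is that `block` is shared by `inner` and `other`, and that `outer` reaches its files only through nesting.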

When you do a Rucio stage-out, files are transferred to the destination site and CRAB creates one container called the Transfer Container and N containers called Publish Containers. Both container types contain your output files, but they are used for different purposes.

- Transfer Container for managing file transfers from the remote site to the destination site. Used internally in CRAB. All files will be added to this container.

- Publish Containers contain the files that have been successfully transferred; there is a separate container for each output file (either from CMSSW or files specified in JobType.outputFiles).

Data location in Rucio is managed through rules applied to containers. A rule means "keep these data here" where "these data" is a container and "here" is an expression which indicates a (set of) Site(s). In CRAB ASO there is only one site: the storage location defined in the task configuration file at submission time as Site.storageSite (e.g. T2_CH_CERN).

These rules trigger Rucio to transfer files to Site.storageSite. The rule for the Transfer Container makes Rucio transfer the files to the destination site, while the rule for each Publish Container locks the transferred files in place so that you can manage them later.

Multiple rules may apply to a given file (even charging its bytes to multiple quota accounts). When all of them expire, the file is "freed" and Rucio can delete it when it needs space.

The rule attached to the Transfer Container has the lifetime of the CRAB task plus 7 days (30+7 days from crab submit time), while the rules attached by CRAB to Publish Containers have no expiration date. You can set a definite expiration date later, at your convenience, to tell Rucio that those data are no longer needed. That will release the quota back to your account.
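As a worked example of the "30+7 days" arithmetic, the sketch below computes when the Transfer Container rule would expire for a given submission time. The function name and the sample date are illustrative assumptions, not CRAB code.

```python
from datetime import datetime, timedelta, timezone

TASK_LIFETIME = timedelta(days=30)  # CRAB task lifetime, counted from submission
EXTRA_GRACE = timedelta(days=7)     # additional days granted to the Transfer rule


def transfer_rule_expiry(submit_time: datetime) -> datetime:
    """Return the expected expiration time of the Transfer Container rule."""
    return submit_time + TASK_LIFETIME + EXTRA_GRACE


submitted = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
print(transfer_rule_expiry(submitted).isoformat())  # 2024-02-07T12:00:00+00:00
```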

You are free to manipulate the rules to suit your needs (e.g., set the rule expiration date, delete the rules to free up quota, etc.). However, you need at least to kill the task before changing anything on the Transfer Container, to avoid triggering unpredictable behavior in Rucio ASO.
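Setting an expiration date on a Publish Container rule could be scripted along these lines. This is a sketch under assumptions: it presumes the Rucio Python client exposes `update_replication_rule(rule_id, options)` and that the `lifetime` option is a number of seconds counted from now; please check the Rucio client documentation for your installed version. The helper name `expire_rule_in` is invented for this example.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def expire_rule_in(client, rule_id: str, days: int):
    """Ask Rucio to let the rule expire `days` days from now.

    `client` is expected to behave like rucio.client.Client (assumption):
    only its update_replication_rule(rule_id, options) method is used.
    """
    if days < 0:
        raise ValueError("days must be non-negative")
    return client.update_replication_rule(rule_id, {"lifetime": days * SECONDS_PER_DAY})


# Typical use, in an environment where the Rucio client is configured:
#   from rucio.client import Client
#   expire_rule_in(Client(), "<your rule ID>", days=30)
```

Passing the client in as an argument keeps the helper easy to try out with a stand-in object before pointing it at real rules.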

All files/containers/rules are created in your own scope: user.${RUCIO_ACCOUNT}.

The Rucio Container name¶

As a reminder, CRAB has 3 output types: EDM (ROOT file with the CMS schema), TFile from TFileService, and Misc files, i.e. the extra files you ask to collect in JobType.outputFiles.

Publish Container names follow these rules:

- If Data.publication = True and the output file is EDM, the container name is the DBS output dataset name.

- Otherwise, /FakeDataset is used as the PrimaryDataset name, and the ProcessedDataset part will be fakefile-FakePublish-<TaskHash> with the output file name appended to it.

The Transfer Container name is derived from the Publish Container name. If there is a file to publish to DBS, CRAB uses the name of that file's container. Otherwise, a name with the prefix /FakeDataset is used. CRAB also adds the suffix _TRANSFER.<TaskHash8th> to make the Transfer Container unique for every task.

(<TaskHash> is the MD5 hash of the task name and <TaskHash8th> is the first 8 characters of the hash hex string.)
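The naming rules above can be sketched in a few lines of Python. This is not the CRAB implementation: the function names, the sample task name, the username `alice` and the PSet hash `0123abcd` are placeholders, and the fake names follow the `fakefile-FakePublish-<TaskHash>` pattern used in the examples on this page.

```python
import hashlib


def task_hash(task_name: str) -> str:
    """MD5 hex digest of the task name (<TaskHash>)."""
    return hashlib.md5(task_name.encode("utf-8")).hexdigest()


def publish_container(filename, is_edm, publication, dbs_dataset, thash):
    """Name of the Publish Container for one output file."""
    if publication and is_edm:
        return dbs_dataset
    return f"/FakeDataset/fakefile-FakePublish-{thash}_{filename}/USER"


def transfer_container(outputs, publication, dbs_dataset, thash):
    """Name of the single Transfer Container of a task.

    `outputs` is a list of (filename, is_edm) pairs.
    """
    has_published_file = publication and any(is_edm for _, is_edm in outputs)
    if has_published_file:
        base = dbs_dataset
    else:
        base = f"/FakeDataset/fakefile-FakePublish-{thash}/USER"
    head, tier = base.rsplit("/", 1)
    # <TaskHash8th> is the first 8 characters of the hash.
    return f"{head}_TRANSFER.{thash[:8]}/{tier}"


# One EDM file plus one Misc file, with publication enabled.
thash = task_hash("hypothetical_task_name")
dbs = "/GenericTTbar/alice-ruciotransfer-0123abcd/USER"
outputs = [("output.root", True), ("myfile.txt", False)]
for name, is_edm in outputs:
    print(name, "->", publish_container(name, is_edm, True, dbs, thash))
print("transfer ->", transfer_container(outputs, True, dbs, thash))
```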

Example 1¶

- You have one output file: output.root (EDM).

- crabConfig.py has Data.publication = True

- Data.inputDataset = '/GenericTTbar/HC-CMSSW_9_2_6_91X_mcRun1_realistic_v2-v2/AODSIM'

--> You will get 2 Rucio containers:

- Publish Container:

    - output.root: /GenericTTbar/<username>-<outputDatasetTag>-<PSetHash>/USER

- Transfer Container: /GenericTTbar/<username>-<outputDatasetTag>-<PSetHash>_TRANSFER.<TaskHash8th>/USER

Example 2¶

- 2 output files: output.root (EDM), myfile.txt (Misc)

- Data.publication = True

- Same Data.inputDataset as Example 1

--> You will get 3 Rucio containers:

- Publish Container:

    - output.root: /GenericTTbar/<username>-<outputDatasetTag>-<PSetHash>/USER

    - myfile.txt: /FakeDataset/fakefile-FakePublish-<TaskHash>_myfile.txt/USER

- Transfer Container: /GenericTTbar/<username>-<outputDatasetTag>-<PSetHash>_TRANSFER.<TaskHash8th>/USER

Example 3¶

- 2 output files: output.root (EDM), myfile.txt (Misc, JobType.outputFiles)

- This time, Data.publication = False.

- Same Data.inputDataset as Example 1

--> You will get 3 Rucio containers:

- Publish Container:

    - output.root: /FakeDataset/fakefile-FakePublish-<TaskHash>_output.root/USER

    - myfile.txt: /FakeDataset/fakefile-FakePublish-<TaskHash>_myfile.txt/USER

- Transfer Container: /FakeDataset/fakefile-FakePublish-<TaskHash>_TRANSFER.<TaskHash8th>/USER

Example 4¶

- 2 output files: output.root (EDM), secondoutput.root (EDM)

- Data.publication = False. CRAB will reject the submission if it is True.

- Same Data.inputDataset as Example 1

--> You will get 3 Rucio containers:

- Publish Container:

    - output.root: /FakeDataset/fakefile-FakePublish-<TaskHash>_output.root/USER

    - secondoutput.root: /FakeDataset/fakefile-FakePublish-<TaskHash>_secondoutput.root/USER

- Transfer Container: /FakeDataset/fakefile-FakePublish-<TaskHash>_TRANSFER.<TaskHash8th>/USER

Example with crabConfig.py¶

Given this CRAB configuration (same settings as Example 2):

    from WMCore.Configuration import Configuration

    config = Configuration()

    config.section_('General')
    config.General.transferLogs = False
    config.General.requestName = 'example2'

    config.section_('JobType')
    config.JobType.maxJobRuntimeMin = 60
    config.JobType.pluginName = 'Analysis'
    config.JobType.psetName = 'pset.py'
    config.JobType.outputFiles = ['myfile.txt']

    config.section_('Data')
    config.Data.splitting = 'LumiBased'
    config.Data.unitsPerJob = 1
    config.Data.outputDatasetTag = 'ruciotransfer'
    config.Data.outLFNDirBase = '/store/user/rucio/tseethon/'
    config.Data.publication = True
    config.Data.totalUnits = 10
    config.Data.inputDataset = '/GenericTTbar/HC-CMSSW_9_2_6_91X_mcRun1_realistic_v2-v2/AODSIM'

    config.section_('User')

    config.section_('Site')
    config.Site.storageSite = 'T2_CH_CERN'

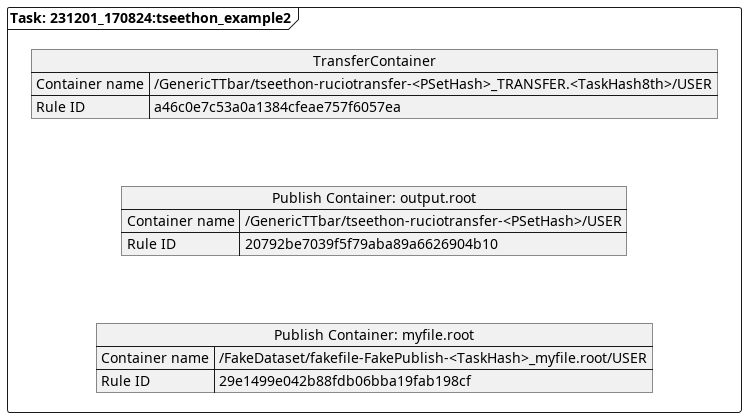

Once the task has been submitted to the Schedd and the Rucio ASO process starts running, you will have containers and rules in Rucio like this:

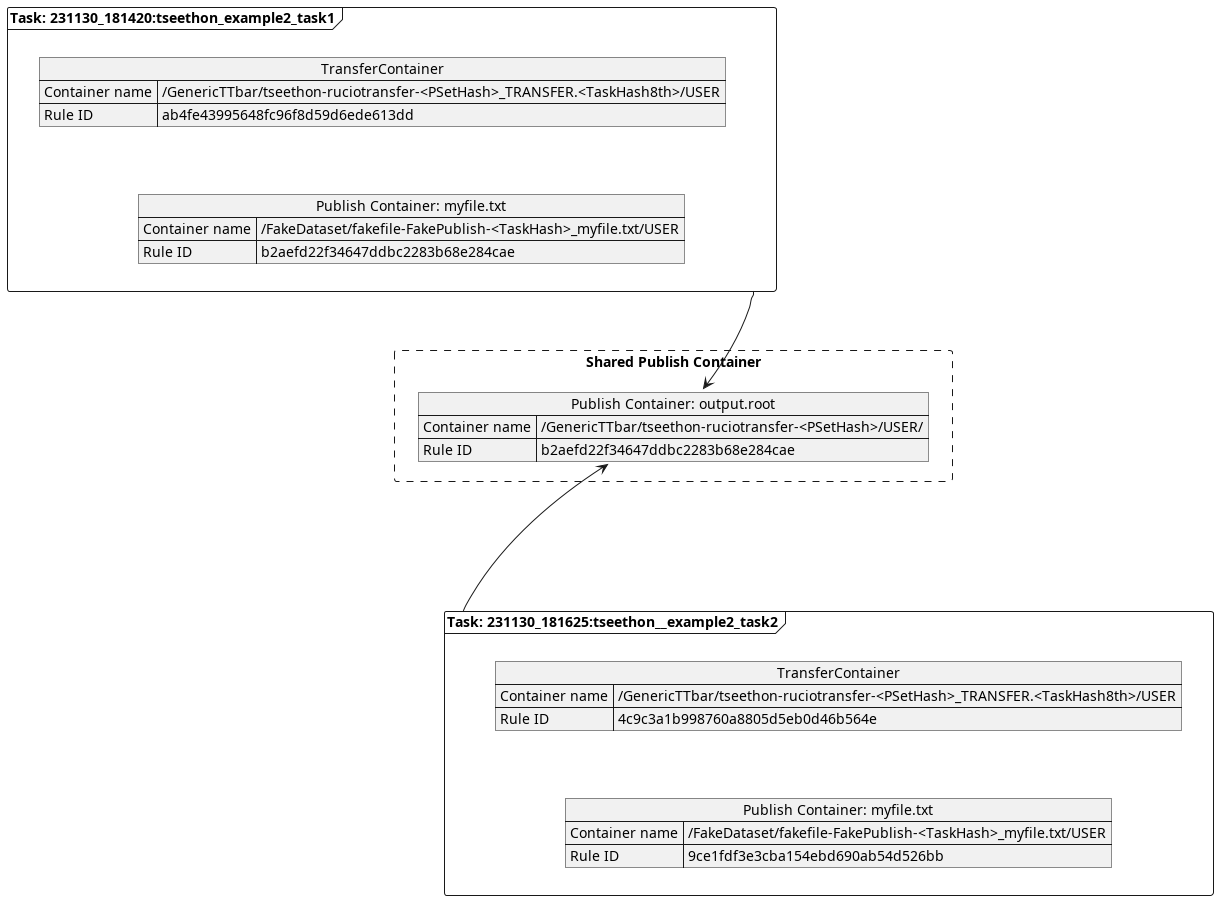

"Publishing to the same DBS dataset"¶

In case you split the input dataset into multiple CRAB tasks, it is still possible to put the outputs together in the same DBS dataset and in the same Rucio Publish Container, but only for files which will be published in DBS and for tasks which have the same Data.outputDatasetTag. That tag is exactly what allows CRAB to tell that files from multiple tasks must end up in the same place!

Where are my files?¶

The LFN changes from the usual /store/{user,group}/${username} to /store/{user,group}/rucio/${RUCIO_ACCOUNT}, where ${RUCIO_ACCOUNT} is your account name for /store/user and the Rucio group account for /store/group.

The files are now owned by Rucio and you can only read them. See FAQs for more information.
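To illustrate the path change, here is a minimal sketch that maps an FTS-style LFN to the corresponding Rucio-style one. The helper name `rucio_lfn` is made up, and the sketch assumes that the name following /store/user or /store/group is the same as the Rucio account name, which may not hold in every case.

```python
def rucio_lfn(old_lfn: str) -> str:
    """Insert the `rucio` path component after /store/user or /store/group."""
    parts = old_lfn.split("/")
    # Expected layout: ['', 'store', 'user' | 'group', '<account>', ...]
    if len(parts) < 4 or parts[0] != "" or parts[1] != "store" or parts[2] not in ("user", "group"):
        raise ValueError(f"not a /store/user or /store/group LFN: {old_lfn}")
    if parts[3] == "rucio":
        return old_lfn  # already in the Rucio layout
    return "/".join(parts[:3] + ["rucio"] + parts[3:])


print(rucio_lfn("/store/user/alice/mytask/output_1.root"))
# /store/user/rucio/alice/mytask/output_1.root
```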

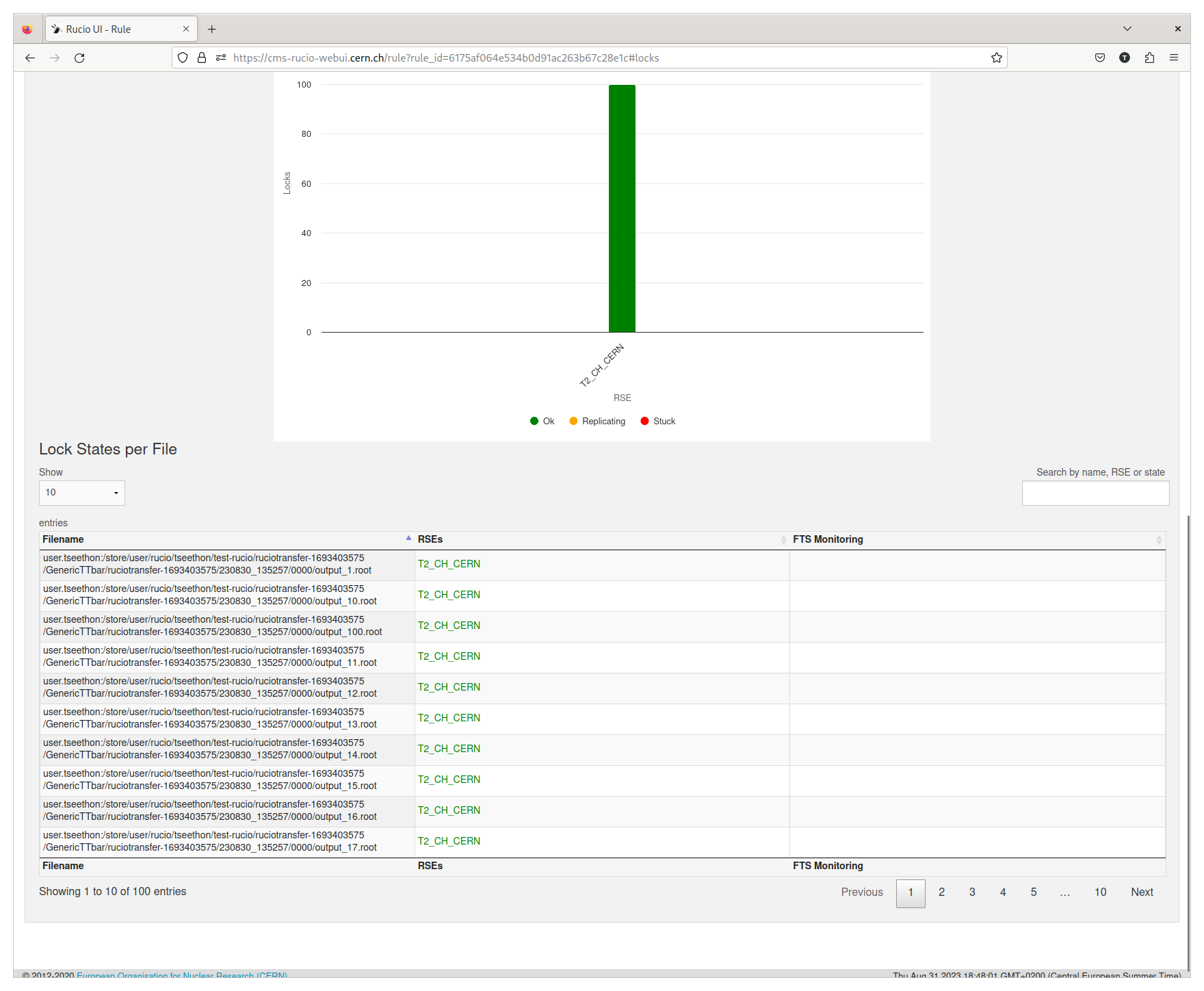

Monitoring transfer status¶

You need to monitor the Transfer Container's rule, either via the CLI (rucio rule-info ruleID) or via the WebUI (e.g., https://cms-rucio-webui.cern.ch/rule?rule_id=4c7a5025378d420288b418017fc23f18, replacing the value after rule_id= with your own rule ID).
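If you track several tasks, building the WebUI link from a rule ID is easy to script. The helper below is a small convenience sketch; the function name is an assumption, while the base URL is the one quoted above.

```python
from urllib.parse import urlencode

WEBUI_RULE_PAGE = "https://cms-rucio-webui.cern.ch/rule"


def rule_webui_url(rule_id: str) -> str:
    """Return the Rucio WebUI page for the given rule ID."""
    return f"{WEBUI_RULE_PAGE}?{urlencode({'rule_id': rule_id})}"


print(rule_webui_url("4c7a5025378d420288b418017fc23f18"))
```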

Rule/Lock State¶

When we attach a rule to a container, Rucio creates a Lock object for each file to track the status of its replica.

A lock can be in one of 3 states:

- OK: The file has been transferred to the destination site (enforced by the replication rule).

- REPLICATING: The file is being transferred. On the Rule page of the Rucio WebUI, you can click on files to get the FTS URL and see the transfer log (Rucio submits FTS jobs to transfer files between sites).

- STUCK: The file failed to transfer. Rucio will retry later.

The rule itself has a similar set of states:

- OK: All locks are OK.

- REPLICATING: Files are being transferred. One or more locks are REPLICATING.

- STUCK: There is at least one lock in STUCK status. Rucio will pick locks in this status and try to transfer them again later.

- SUSPENDED: After a rule is STUCK for 14 days, Rucio gives up. No more transfer attempts for this rule.

SUSPENDED rules can be kicked back in STUCK status by operators so that Rucio tries again.

Rucio does its best to satisfy the rule and make sure all files in Rucio container appear on the destination site. That means when the rule/lock gets stuck, Rucio keeps re-trying until the timeout is reached (up to 14 days).
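The relation between lock states and the rule state described above can be summarized in a few lines. This is a simplified model for illustration only, not Rucio code: the function name is invented, the in-progress rule state is spelled REPLICATING as in Rucio, and the 14-day threshold is the one quoted in this section.

```python
from datetime import timedelta

SUSPEND_AFTER = timedelta(days=14)  # after this long in STUCK, Rucio gives up


def rule_state(lock_states, stuck_for=timedelta(0)):
    """Derive a rule state from the states of its locks.

    `stuck_for` is how long the rule has already been STUCK.
    """
    states = list(lock_states)
    if "STUCK" in states:
        return "SUSPENDED" if stuck_for >= SUSPEND_AFTER else "STUCK"
    if "REPLICATING" in states:
        return "REPLICATING"
    return "OK"


print(rule_state(["OK", "OK"]))                          # OK
print(rule_state(["OK", "REPLICATING"]))                 # REPLICATING
print(rule_state(["OK", "REPLICATING", "STUCK"]))        # STUCK
print(rule_state(["STUCK"], stuck_for=timedelta(days=15)))  # SUSPENDED
```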

How does it work internally?¶

1. When the job is in the "transferring" stage, PostJob sends the list of files that need to be transferred to the destination to the Rucio asynchronous stageout process ("ASO" for short).

2. The first time, the ASO machinery:

    - creates the Transfer Container and attaches a replication rule to it.

    - creates Publish Containers as needed, together with their replication rules, which use the same expression as the Transfer Container.

3. ASO reads the list of files, registers their names in Rucio and defines for each of them a replica in the RSE_Temp of the execution site.

4. ASO adds the newly registered files to the Transfer Container. With the replication rule ASO defined earlier, Rucio triggers the transfer of the files to the destination.

    - Note that we cannot add files directly to the container. We need a Rucio "dataset" (equivalent to a DBS block in CMS terms) to contain these files, and then we attach the Rucio dataset to the Rucio container. A new dataset is created every 100 files.

5. When the state of the file (lock) changes to "OK", ASO notifies PostJob that the transfer of the files associated with the job is now complete, so PostJob can mark the job as "finished".

6. ASO also adds "OK" files to the Publish Container in parallel. This way, we can guarantee that all files in a Publish Container are already at the destination site, whereas the Transfer Container can have some files that have not been transferred to the destination site (yet).

    - Note that each Publish Container has its own rule as well. We set this rule to be the same as the Transfer Container's but, because the files are already there, the rule always has the "OK" state.

7. ASO keeps running steps 3-6 until the task is completed or the timeout is reached.

    - The timeout is currently 7 days after the end of life of the DAG, i.e. once all job (re)submission has stopped, we wait for up to 7 days before giving up.

8. When ASO stops due to the timeout:

    - the rule for the Transfer Container expires at the same time, so Rucio will no longer try to transfer files.

    - some transfers may still be in progress (e.g. Rucio submitted a request to FTS which is still queued or retrying). If those transfers eventually complete and the files appear at the destination RSE, they will not be added to Rucio datasets/containers, will not have a rule on them and will not be published in DBS (if relevant).

9. In any case, when ASO completes:

    - Containers and datasets remain in the Rucio database (like datasets in DBS, names are never removed from Rucio).

    - Rules on Publish Containers stay in place.
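The "one new dataset every 100 files" bookkeeping mentioned in the steps above can be illustrated as follows. This is a sketch, not the ASO code: the function name and the `<container>#<index>` dataset naming are purely hypothetical.

```python
DATASET_SIZE = 100  # a new Rucio dataset is started every 100 files


def group_into_datasets(container: str, lfns, size: int = DATASET_SIZE):
    """Split a list of files into datasets of at most `size` files each.

    Returns a dict mapping a (hypothetical) dataset name to its files;
    each dataset would then be attached to `container`.
    """
    files = list(lfns)
    return {
        f"{container}#{start // size}": files[start:start + size]
        for start in range(0, len(files), size)
    }


files = [f"/store/user/rucio/alice/task/output_{i}.root" for i in range(250)]
datasets = group_into_datasets("/FakeDataset/example/USER", files)
for name, members in datasets.items():
    print(name, len(members))
```

With 250 files this produces three datasets holding 100, 100 and 50 files.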

Quick comparison with FTS Stageout¶

| kind | FTS | Rucio |
|---|---|---|
| LFN path | /store/user/ | /store/user/rucio/ |
| Quota | Site (Site Admin) | Rucio (CMS Data Management) |
| File owner | User | Rucio |
| Monitoring transfers | FTS logs/WebUI | Rucio WebUI, CLI |
| Manage file | Usual unix tool rm/cp | Create/remove rule. (users have read-only access to their files). |